Amazon SageMaker Machine Learning Automation

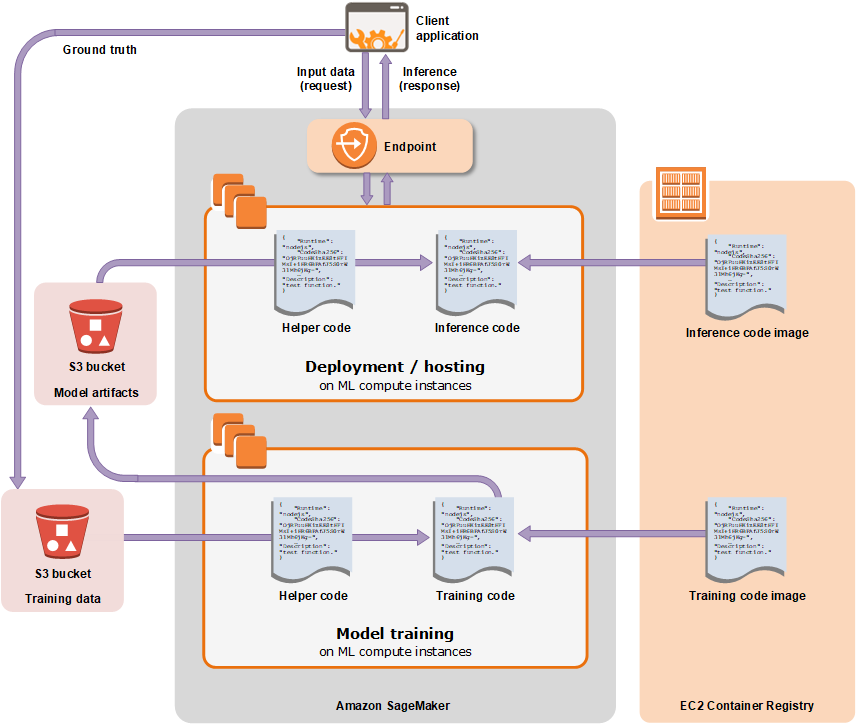

Amazon SageMaker is a fully managed service that provides every developer and data scientist with the ability to build, train, and deploy machine learning (ML) models automatically. SageMaker enables developers to operate at a number of levels of abstraction when training and deploying machine learning models.

At its highest level of abstraction, SageMaker provides pre-trained ML models that can be deployed as-is. In addition, SageMaker provides a number of built-in ML algorithms that developers can train on their own data. Further, SageMaker provides managed instances of TensorFlow and Apache MXNet, where developers can create their own ML algorithms from scratch. Regardless of which level of abstraction is used, a developer can connect their SageMaker-enabled ML models to other AWS services, such as the Amazon DynamoDB database for structured data storage, AWS Batch for offline batch processing, or Amazon Kinesis for real-time processing.

A number of interfaces are available for developers to interact with SageMaker. First, there is a web API that remotely controls a SageMaker server instance. While the web API is agnostic to the programming language used by the developer, Amazon provides SageMaker API bindings for a number of languages, including Python, JavaScript, Ruby, and Java. In addition, SageMaker provides managed Jupyter Notebook instances for interactively programming SageMaker and other applications.

Amazon SageMaker makes it easy to deploy your trained model into production with a single click so that you can start generating predictions for real-time or batch data. You can one-click deploy your model onto auto-scaling Amazon ML instances across multiple availability zones for high redundancy. Just specify the type of instance, and the maximum and minimum number desired, and SageMaker takes care of the rest. SageMaker will launch the instances, deploy your model, and set up the secure HTTPS endpoint for your application. Your application simply needs to include an API call to this endpoint to achieve low latency, high throughput inference. This architecture allows you to integrate your new models into your application in minutes because model changes no longer require application code changes.
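
The API call to the endpoint can be sketched with the boto3 `sagemaker-runtime` client. This is a minimal sketch, assuming a model that accepts one CSV row per request; the endpoint name `my-endpoint` and the helper names are hypothetical, and the client is passed in as an argument so the function can be tried without AWS credentials.

```python
def to_csv_row(features):
    """Serialize one feature vector as a single CSV line (assumed input format)."""
    return ",".join(str(value) for value in features)


def predict(runtime_client, endpoint_name, features):
    """Send one record to a SageMaker endpoint and return the decoded response.

    `runtime_client` is expected to behave like boto3.client("sagemaker-runtime").
    """
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Body=to_csv_row(features),
    )
    # The response body is a stream: read it fully, then decode it.
    return response["Body"].read().decode("utf-8")


# Usage against a real endpoint (requires boto3 and AWS credentials):
#   import boto3
#   runtime = boto3.client("sagemaker-runtime")
#   print(predict(runtime, "my-endpoint", [5.1, 3.5, 1.4, 0.2]))
```

Because the application only knows the endpoint name, the model behind it can be replaced without touching this code.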

Deploying with SageMaker

The development

Depending on where you are in your ML journey, or indeed your use case, you may choose to develop your own ML model rather than use an off-the-shelf model. The next (also optional) step is to train the model. You can save any resulting model files to S3 for later use. You then need to write the inference code to build your API endpoint, which will serve the requests made to the model.
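
As a rough illustration of what that inference code can look like: a custom SageMaker hosting container is expected to answer `GET /ping` health checks and `POST /invocations` prediction requests on port 8080. The sketch below uses only the Python standard library. The `run_model` function and the `{"features": [...]}` request shape are assumptions made for the example, not part of any SageMaker API.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def run_model(features):
    """Hypothetical stand-in for a real model: returns the sum of the inputs."""
    return sum(features)


def route(method, path, body):
    """Map a request to (status_code, payload) following the hosting contract."""
    if method == "GET" and path == "/ping":
        return 200, {"status": "ok"}  # health check
    if method == "POST" and path == "/invocations":
        try:
            features = json.loads(body)["features"]
            return 200, {"prediction": run_model(features)}
        except (ValueError, KeyError, TypeError):
            return 400, {"error": "expected JSON such as {\"features\": [1, 2]}"}
    return 404, {"error": "not found"}


class InferenceHandler(BaseHTTPRequestHandler):
    """Thin HTTP wrapper around route()."""

    def _handle(self, method):
        length = int(self.headers.get("Content-Length") or 0)
        status, payload = route(method, self.path, self.rfile.read(length))
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self):
        self._handle("GET")

    def do_POST(self):
        self._handle("POST")


def serve(port=8080):
    """Block forever serving requests on the given port."""
    HTTPServer(("0.0.0.0", port), InferenceHandler).serve_forever()
```

Keeping the routing in a plain function makes it easy to test locally before the code is packaged into a Docker image; calling `serve()` starts the server.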

To speed up future development, as well as to make it consistent and repeatable, you may choose to create a ‘base’ Docker image with everything (but only what) you need for each project, and use that as the base for each new Dockerfile. Docker Compose also comes in handy, especially for building and running locally to test before deploying.

Setting up the endpoint

When you’re ready to expose your model to the world, there will be three main steps to deploying your model with SageMaker: configuring the model, creating the endpoint configuration, and configuring the endpoint itself.

Create the model

As we configure the model in SageMaker, we provide the location of the model artefacts and then the location of the inference code. Done by hand, this might seem like a lot of work: a little laborious and likely to be error-prone.

One way to automate it is to have a git commit trigger a CodeBuild project, which starts a job to package up the code as part of a Docker image. You could include the Dockerfile as part of the developer’s code repository, or fetch a standardized one from somewhere else.

The same CodeBuild project can even be used to make SageMaker SDK calls to configure the model in SageMaker, create the endpoint configuration and create the endpoint. You may also choose to specify where the model artefacts are located in S3 as part of the SageMaker configuration steps, instead of packaging them up in the Docker image.
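
The model configuration step can be sketched as a small helper that assembles the arguments for the boto3 `create_model` call. The helper name and every value in the usage comment (image URI, role ARN, S3 path) are hypothetical placeholders. The S3 location is optional here, mirroring the choice between shipping artefacts inside the image and pointing SageMaker at S3.

```python
def build_create_model_request(model_name, image_uri, role_arn, model_data_url=None):
    """Assemble keyword arguments for the SageMaker create_model call.

    image_uri      -- Docker image that contains the inference code
    model_data_url -- optional S3 location of the model artefacts; leave it
                      out when the artefacts are baked into the image
    """
    container = {"Image": image_uri}
    if model_data_url is not None:
        container["ModelDataUrl"] = model_data_url
    return {
        "ModelName": model_name,
        "PrimaryContainer": container,
        "ExecutionRoleArn": role_arn,
    }


# Usage from a build job (requires boto3 and AWS credentials):
#   import boto3
#   request = build_create_model_request(
#       "my-model",
#       "<account>.dkr.ecr.<region>.amazonaws.com/my-image:latest",
#       "arn:aws:iam::<account>:role/my-sagemaker-role",
#       model_data_url="s3://my-bucket/model.tar.gz",
#   )
#   boto3.client("sagemaker").create_model(**request)
```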

Creating the endpoint configuration

This is where we start to do a bit more dynamic configuration: the production variants. This means firstly selecting the model that we just configured. You can even select multiple models as variants and apply weightings for A/B testing. Continue by selecting the instance type to use, deciding how many instances to deploy, choosing whether or not to enable elastic inference, and setting a few other small details.
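
To make the weighting concrete, here is a minimal sketch of an endpoint configuration with two production variants, shaped like the arguments to the boto3 `create_endpoint_config` call. Each variant receives a share of traffic equal to its weight divided by the sum of all weights. The helper names, model names, and instance type are assumptions chosen for the example.

```python
def build_variant(variant_name, model_name, instance_type, instance_count, weight):
    """Describe one production variant of an endpoint configuration."""
    return {
        "VariantName": variant_name,
        "ModelName": model_name,
        "InstanceType": instance_type,
        "InitialInstanceCount": instance_count,
        "InitialVariantWeight": weight,
    }


def traffic_shares(variants):
    """Return the fraction of requests each variant receives."""
    total = sum(v["InitialVariantWeight"] for v in variants)
    return {v["VariantName"]: v["InitialVariantWeight"] / total for v in variants}


def build_endpoint_config_request(config_name, variants):
    """Assemble keyword arguments for the create_endpoint_config call."""
    return {"EndpointConfigName": config_name, "ProductionVariants": variants}


# A/B test: the current model gets 75% of traffic, the candidate 25%.
variants = [
    build_variant("current", "my-model-v1", "ml.c5.large", 2, 3.0),
    build_variant("candidate", "my-model-v2", "ml.c5.large", 1, 1.0),
]
print(traffic_shares(variants))

# With boto3 and credentials available:
#   boto3.client("sagemaker").create_endpoint_config(
#       **build_endpoint_config_request("my-config", variants))
```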

Instance types

This is the compute instance type to use, not dissimilar to launching a regular EC2 instance. Instance types are grouped into families based on core capabilities of the host computer hardware, such as compute, memory, and storage. Valid options can be found in the online documentation or the drop-down list in the console. At the time of writing, the available types include the t2, m4, c4, c5, p2, and p3 instance classes, each of varying capacity.

The p2 and p3 instances come under the Accelerated Computing family, launched with an attached GPU by default – useful for use cases such as deep learning.

Elastic inference

You may have some workloads that can benefit from the abilities of a GPU but do not require one 24/7. Provisioning GPUs anyway is financially inefficient, but luckily AWS introduced something called elastic inference (EI). EI provides acceleration only when required, and is automatically started when the supported frameworks are being used.

The latest supported frameworks can be found in the usual documentation, but as of today they include EI-enabled versions of TensorFlow and MXNet. If you are using a different deep learning framework, simply export your model by using ONNX, and then import your model into MXNet, so that you can use your model with EI as an MXNet model.
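
In API terms, enabling EI comes down to one extra field on a production variant. The sketch below pairs a CPU instance with an accelerator through the `AcceleratorType` field. The helper name and the default sizes are assumptions, and accelerator availability should be checked against the current documentation.

```python
def build_ei_variant(model_name, instance_type="ml.c5.large",
                     accelerator_type="ml.eia2.medium", instance_count=1):
    """Describe a production variant that attaches an EI accelerator.

    The instance type covers the CPU and memory needs of the application;
    the accelerator type is sized separately for inference acceleration.
    """
    return {
        "VariantName": "AllTraffic",
        "ModelName": model_name,
        "InstanceType": instance_type,
        "InitialInstanceCount": instance_count,
        "AcceleratorType": accelerator_type,
    }


print(build_ei_variant("my-mxnet-model"))
```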

Configuring the endpoint

The last step in the process is to create an endpoint in SageMaker and apply the endpoint configuration you just created to it. You can reconfigure your endpoint later by creating a new endpoint configuration and applying that to your endpoint instead.
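
Both cases, first deployment and later reconfiguration, can be sketched in one helper built on the boto3 SageMaker client methods `list_endpoints`, `create_endpoint`, and `update_endpoint`. The helper name is hypothetical and the client is passed in, so the logic can be exercised with a stand-in object. The existence check is an assumption about how you might want to structure this, not a prescribed pattern.

```python
def deploy_endpoint(sm_client, endpoint_name, config_name):
    """Create the endpoint, or point an existing one at a new configuration.

    `sm_client` is expected to behave like boto3.client("sagemaker").
    Returns "created" or "updated".
    """
    # NameContains is a substring filter, so compare names exactly afterwards.
    # Results are paginated; this sketch only inspects the first page.
    listed = sm_client.list_endpoints(NameContains=endpoint_name)["Endpoints"]
    exists = any(e["EndpointName"] == endpoint_name for e in listed)

    if exists:
        sm_client.update_endpoint(
            EndpointName=endpoint_name, EndpointConfigName=config_name)
        return "updated"

    sm_client.create_endpoint(
        EndpointName=endpoint_name, EndpointConfigName=config_name)
    return "created"


# Usage (requires boto3 and AWS credentials):
#   import boto3
#   deploy_endpoint(boto3.client("sagemaker"), "my-endpoint", "my-config-v2")
```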

Making it scale

SageMaker does a lot of the heavy lifting for us already, but you may have noticed there are quite a few steps involved in the process so far. To build a platform that enables users to be autonomous and self-sufficient, we should probably abstract some of that away, so that they can focus on their domain knowledge.

Amazon SageMaker Studio

Amazon SageMaker Studio provides a single, web-based visual interface where you can perform all ML development steps. SageMaker Studio gives you complete access, control, and visibility into each step required to build, train, and deploy models. You can quickly upload data, create new notebooks, train and tune models, move back and forth between steps to adjust experiments, compare results, and deploy models to production all in one place, making you much more productive. All ML development activities including notebooks, experiment management, automatic model creation, debugging, and model drift detection can be performed within the unified SageMaker Studio visual interface.

Amazon SageMaker Autopilot

Amazon SageMaker Autopilot is the industry’s first automated machine learning capability that gives you complete control and visibility into your ML models. Typical approaches to automated machine learning do not give you the insights into the data used in creating the model or the logic that went into creating the model. As a result, even if the model is mediocre, there is no way to evolve it. Also, you don’t have the flexibility to make trade-offs such as sacrificing some accuracy for lower latency predictions since typical automated ML solutions provide only one model to choose from.

SageMaker Autopilot automatically inspects raw data, applies feature processors, picks the best set of algorithms, trains and tunes multiple models, tracks their performance, and then ranks the models based on performance, all with just a few clicks. The result is the best performing model that you can deploy at a fraction of the time normally required to train the model. You get full visibility into how the model was created and what’s in it, and SageMaker Autopilot integrates with Amazon SageMaker Studio. You can explore up to 50 different models generated by SageMaker Autopilot inside SageMaker Studio, so it’s easy to pick the best model for your use case. SageMaker Autopilot can be used by people without machine learning experience to easily produce a model, or it can be used by experienced developers to quickly develop a baseline model on which teams can further iterate.
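
Outside the Studio interface, the same job can be started programmatically. The following is a minimal sketch of the arguments for the boto3 `create_auto_ml_job` call, plus a helper that ranks candidates shaped like the entries returned by `list_candidates_for_auto_ml_job`. The helper names, S3 paths, and column name are hypothetical. Whether a higher metric value is better depends on the objective, so that is left as a parameter.

```python
def build_autopilot_request(job_name, train_s3_uri, target_column,
                            output_s3_uri, role_arn, max_candidates=50):
    """Assemble keyword arguments for the create_auto_ml_job call."""
    return {
        "AutoMLJobName": job_name,
        "InputDataConfig": [{
            "DataSource": {
                "S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": train_s3_uri}
            },
            # Column in the training data that the model should predict.
            "TargetAttributeName": target_column,
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "AutoMLJobConfig": {"CompletionCriteria": {"MaxCandidates": max_candidates}},
        "RoleArn": role_arn,
    }


def rank_candidates(candidates, maximize=True):
    """Sort candidate summaries by their final objective metric value."""
    return sorted(
        candidates,
        key=lambda c: c["FinalAutoMLJobObjectiveMetric"]["Value"],
        reverse=maximize,
    )


# Usage (requires boto3 and AWS credentials):
#   import boto3
#   sm = boto3.client("sagemaker")
#   sm.create_auto_ml_job(**build_autopilot_request(
#       "my-automl-job", "s3://my-bucket/train/", "label",
#       "s3://my-bucket/output/", "arn:aws:iam::<account>:role/my-role"))
```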

Amazon SageMaker Ground Truth

Successful machine learning models are built on the shoulders of large volumes of high-quality training data. But, the process to create the training data necessary to build these models is often expensive, complicated, and time-consuming. Amazon SageMaker Ground Truth helps you build and manage highly accurate training datasets quickly. Ground Truth offers easy access to labelers through Amazon Mechanical Turk and provides them with pre-built workflows and interfaces for common labeling tasks. You can also use your own labelers or use vendors recommended by Amazon through AWS Marketplace. Additionally, Ground Truth continuously learns from labels done by humans to make high quality, automatic annotations to significantly lower labeling costs.

Amazon SageMaker Experiments

Amazon SageMaker Experiments helps you organize and track iterations to machine learning models. Training an ML model typically entails many iterations to isolate and measure the impact of changing data sets, algorithm versions, and model parameters. You produce hundreds of artifacts such as models, training data, platform configurations, parameter settings, and training metrics during these iterations. Often cumbersome mechanisms like spreadsheets are used to track these experiments.

SageMaker Experiments helps you manage iterations by automatically capturing the input parameters, configurations, and results, and storing them as ‘experiments’. You can work within the visual interface of SageMaker Studio, where you can browse active experiments, search for previous experiments by their characteristics, review previous experiments with their results, and compare experiment results visually.

SageMaker Debugger

The ML training process is largely opaque and the time it takes to train a model can be long and difficult to optimize. As a result, it is often difficult to interpret and explain models. Amazon SageMaker Debugger makes the training process more transparent by automatically capturing real-time metrics during training such as training and validation, confusion matrices, and learning gradients to help improve model accuracy.

The metrics from SageMaker Debugger can be visualized in SageMaker Studio for easy understanding. SageMaker Debugger can also generate warnings and remediation advice when common training problems are detected. With SageMaker Debugger, you can interpret how a model is working, representing an early step towards model explainability.

Amazon SageMaker Model Monitor

Amazon SageMaker Model Monitor allows developers to detect and remediate concept drift. Today, one of the big factors that can affect the accuracy of deployed models is if the data being used to generate predictions differs from data used to train the model. For example, changing economic conditions could drive new interest rates affecting home purchasing predictions. This is called concept drift, whereby the patterns the model uses to make predictions no longer apply. SageMaker Model Monitor automatically detects concept drift in deployed models and provides detailed alerts that help identify the source of the problem. All models trained in SageMaker automatically emit key metrics that can be collected and viewed in SageMaker Studio. From inside SageMaker Studio you can configure data to be collected, how to view it, and when to receive alerts.
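
To illustrate the underlying idea only, the sketch below compares live request data against statistics captured from the training data and flags features whose mean has shifted by more than a chosen number of baseline standard deviations. This is a deliberately simple stand-in written for this article, not the method Model Monitor uses. The function names, feature names, and threshold are all assumptions.

```python
from statistics import mean, pstdev


def baseline_stats(rows):
    """Compute (mean, standard deviation) per feature from training rows."""
    features = list(rows[0].keys())
    return {
        f: (mean([r[f] for r in rows]), pstdev([r[f] for r in rows]))
        for f in features
    }


def drifted_features(baseline, live_rows, threshold=3.0):
    """Return features whose live mean moved more than `threshold` baseline
    standard deviations away from the training mean."""
    drifted = []
    for feature, (base_mean, base_std) in baseline.items():
        live_mean = mean([row[feature] for row in live_rows])
        # Guard against a constant feature in the baseline.
        scale = base_std if base_std > 0 else 1.0
        if abs(live_mean - base_mean) / scale > threshold:
            drifted.append(feature)
    return drifted


# Interest rates have roughly doubled since training; income has not moved.
training = [
    {"rate": 3.0, "income": 50.0}, {"rate": 3.2, "income": 52.0},
    {"rate": 2.8, "income": 48.0}, {"rate": 3.1, "income": 51.0},
    {"rate": 2.9, "income": 49.0},
]
live = [{"rate": 6.0, "income": 50.0}, {"rate": 6.2, "income": 51.0}]
print(drifted_features(baseline_stats(training), live))
```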

Many machine learning applications require humans to review low confidence predictions to ensure the results are correct. But building human review into the workflow can be time-consuming and expensive, and it often involves complex processes. Amazon Augmented AI is a service that makes it easy to build the workflows required for human review of ML predictions. Augmented AI provides built-in human review workflows for common machine learning use cases. You can also create your own workflows for models built on Amazon SageMaker. With Augmented AI, you can allow human reviewers to step in when a model is unable to make high confidence predictions.
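
The routing decision described above can be sketched as a confidence threshold in front of the `start_human_loop` call of the boto3 `sagemaker-a2i-runtime` client. The helper name, the 0.8 threshold, and the layout of the input content are assumptions for illustration. The flow definition (the review workflow) is assumed to exist already.

```python
import json


def review_if_uncertain(a2i_client, flow_definition_arn, loop_name,
                        item, prediction, confidence, threshold=0.8):
    """Start a human review loop when the model is not confident enough.

    `a2i_client` is expected to behave like
    boto3.client("sagemaker-a2i-runtime"). Returns True when a review
    was requested, False when the prediction was accepted as-is.
    """
    if confidence >= threshold:
        return False

    a2i_client.start_human_loop(
        HumanLoopName=loop_name,
        FlowDefinitionArn=flow_definition_arn,
        # InputContent must be a JSON string; its fields are up to the workflow.
        HumanLoopInput={"InputContent": json.dumps({
            "item": item,
            "prediction": prediction,
            "confidence": confidence,
        })},
    )
    return True
```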

Amazon SageMaker Components

Amazon SageMaker Components for Kubeflow Pipelines, now in preview, are open-source plugins that allow you to use Kubeflow Pipelines to define your ML workflows and use SageMaker for the data labeling, training, and inference steps. Kubeflow Pipelines is an add-on to Kubeflow that lets you build and deploy portable and scalable end-to-end ML pipelines. However, when using Kubeflow Pipelines, ML ops teams need to manage a Kubernetes cluster with CPU and GPU instances and keep its utilization high at all times to reduce operational costs. Maximizing the utilization of a cluster across data science teams is challenging and adds additional operational overhead to the ML ops teams.

As an alternative to an ML optimized Kubernetes cluster, with Amazon SageMaker Components for Kubeflow Pipelines you can take advantage of powerful SageMaker features such as data labeling, fully managed large-scale hyperparameter tuning and distributed training jobs, one-click secure and scalable model deployment, and cost-effective training through EC2 Spot instances without needing to configure and manage Kubernetes clusters specifically to run the machine learning jobs.

Kubernetes is an open source system used to automate the deployment, scaling, and management of containerized applications. Many customers want to use the fully managed capabilities of Amazon SageMaker for machine learning, but also want platform and infrastructure teams to continue using Kubernetes for orchestration and managing pipelines. SageMaker lets users train and deploy models in SageMaker using Kubernetes operators.

In most deep learning applications, making predictions using a trained model – a process called inference – can be a major factor in the compute costs of the application. A full GPU instance may be over-sized for model inference. In addition, it can be difficult to optimize the GPU, CPU, and memory needs of your deep-learning application. Amazon Elastic Inference solves these problems by allowing you to attach just the right amount of GPU-powered inference acceleration to any Amazon EC2 or Amazon SageMaker instance type or Amazon ECS task with no code changes. With Elastic Inference, you can choose the instance type that is best suited to the overall CPU and memory needs of your application, and then separately configure the amount of inference acceleration that you need to use resources efficiently and to reduce the cost of running inference.

Amazon SageMaker Python SDK

Amazon SageMaker Python SDK is an open source library for training and deploying machine-learned models on Amazon SageMaker. With the SDK, you can train and deploy models using popular deep learning frameworks, algorithms provided by Amazon, or your own algorithms built into SageMaker-compatible Docker images.

SageMaker Debugger provides a way to hook into the training process and emit debug artifacts (a.k.a. “tensors”) that represent the training state at each point in the training lifecycle. Debugger then stores the data in real time and uses rules that encapsulate logic to analyze tensors and react to anomalies. Debugger provides built-in rules and allows you to write custom rules for analysis.
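
The idea of a rule that inspects saved tensors can be illustrated in plain Python. The function below is a conceptual sketch of a custom "vanishing gradient" check over gradient values recorded per training step. It does not use the Debugger API, and the data layout, function name, and threshold are assumptions made for the example.

```python
def vanishing_gradient_steps(gradients_by_step, threshold=1e-7):
    """Return the training steps whose mean absolute gradient is below threshold.

    gradients_by_step -- dict mapping a step number to a non-empty list of
                         gradient values captured at that step
    """
    flagged = []
    for step in sorted(gradients_by_step):
        values = gradients_by_step[step]
        mean_abs = sum(abs(v) for v in values) / len(values)
        if mean_abs < threshold:
            flagged.append(step)
    return flagged


# Gradients collapse at step 100 and recover by step 200.
captured = {0: [0.5, -0.3], 100: [1e-9, -2e-9], 200: [1e-3, 4e-3]}
print(vanishing_gradient_steps(captured))
```

A real rule would typically react to a flagged step, for example by raising an alert or stopping the training job.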

Amazon SageMaker Application

Tens of thousands of customers utilize Amazon SageMaker to help accelerate their machine learning deployments, including ADP, AstraZeneca, Avis, Bayer, British Airways, Cerner, Convoy, Emirates NBD, Gallup, Georgia-Pacific, GoDaddy, Hearst, Intuit, LexisNexis, Los Angeles Clippers, NuData (a Mastercard Company), Panasonic Avionics, The Globe and Mail, and T-Mobile.

Since launch, AWS has regularly added new capabilities to Amazon SageMaker, with more than 50 new capabilities delivered in the last year alone, including Amazon SageMaker Ground Truth to build highly accurate annotated training datasets, SageMaker RL to help developers use a powerful training technique called reinforcement learning, and SageMaker Neo which gives developers the ability to train an algorithm once and deploy on any hardware. These capabilities have helped many more developers build custom machine learning models. But just as barriers to machine learning adoption have been removed by Amazon SageMaker, customers’ desire to utilize machine learning at scale has only increased.

About Amazon Web Services

Amazon Web Services is the world’s most comprehensive and broadly adopted cloud platform. AWS offers over 165 fully featured services for compute, storage, databases, networking, analytics, robotics, machine learning and artificial intelligence (AI), Internet of Things (IoT), mobile, security, hybrid, virtual and augmented reality (VR and AR), media, and application development, deployment, and management from 69 Availability Zones (AZs) within 22 geographic regions, with announced plans for 13 more Availability Zones and four more AWS Regions in Indonesia, Italy, South Africa, and Spain. Millions of customers—including the fastest-growing startups, largest enterprises, and leading government agencies—use AWS to power their infrastructure, become more agile, and lower costs.

About Amazon

Amazon is guided by four principles: customer obsession rather than competitor focus, passion for invention, commitment to operational excellence, and long-term thinking. Customer reviews, 1-Click shopping, personalized recommendations, Prime, Fulfillment by Amazon, AWS, Kindle Direct Publishing, Kindle, Fire tablets, Fire TV, Amazon Echo, and Alexa are some of the products and services pioneered by Amazon.
