H2O Driverless AI is an artificial intelligence (AI) platform that automates some of the most difficult data science and machine learning workflows such as feature engineering, model validation, model tuning, model selection and model deployment. It aims to achieve highest predictive accuracy, comparable to expert data scientists, but in much shorter time thanks to end-to-end automation. Driverless AI also offers automatic visualizations and machine learning interpretability (MLI).

H2O Driverless AI Platform

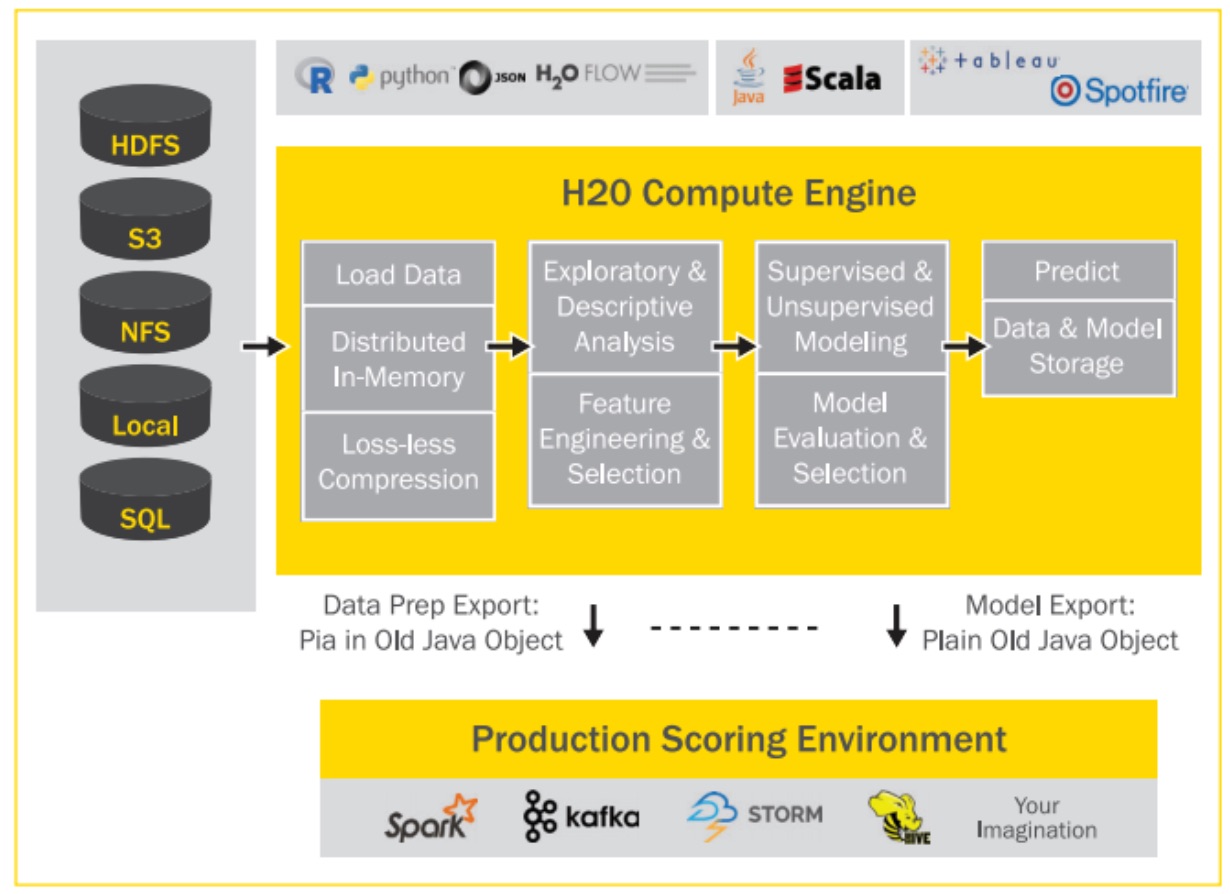

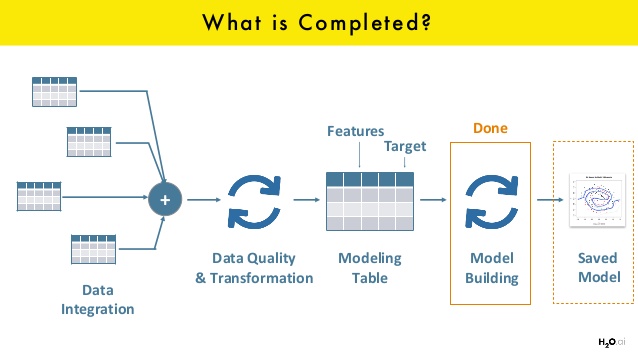

H2O Driverless AI is a high-performance, GPU-enabled computing platform for automatic development and rapid deployment of predictive analytics models. It reads tabular data from plain text sources and from a variety of external data sources, and it automates data visualization and the construction of predictive models.

Driverless AI also includes Machine Learning Interpretability (MLI), which incorporates a number of contemporary approaches to increase the transparency and accountability of complex models by providing model results in a human-readable format.

Driverless AI targets business applications such as loss-given-default, probability of default, customer churn, campaign response, fraud detection, anti-money-laundering, demand forecasting, and predictive asset maintenance models.

Key features

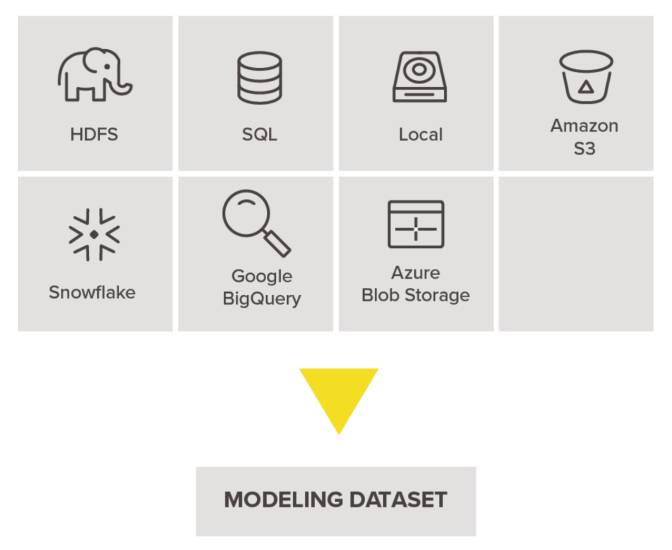

Flexibility of Data and Deployment: Driverless AI works across a variety of data sources including Hadoop HDFS, Amazon S3, and more. Driverless AI can be deployed everywhere including all clouds (Microsoft Azure, AWS, Google Cloud) and on premises on any system, but it is ideally suited for systems with GPUs, including IBM Power 9 with GPUs built in.

NVIDIA GPU Acceleration: The platform is optimized to take advantage of GPU acceleration to achieve up to 40X speedups for automatic machine learning. It includes multi-GPU algorithms for XGBoost, GLM, K-Means, and more. GPUs allow for thousands of iterations of model features and optimizations.

Automatic Data Visualization (Autovis): Driverless AI automatically selects data plots based on the most relevant data statistics, generates visualizations, and creates data plots that are most relevant from a statistical perspective based on the most relevant data statistics.

Automatic Feature Engineering: Software employs a library of algorithms and feature transformations to automatically engineer new, high value features for a given dataset. Included in the interface is an easy-to-read variable importance chart that shows the significance of original and newly engineered features.

Automatic Model Documentation: Driverless AI provides an Autoreport (Autodoc) for each experiment, relieving the user from the time-consuming task of documenting and summarizing their workflow used when building machine learning models. The Autoreport includes details about the data used, the validation schema selected, model and feature tuning, and the final model created. With this capability in Driverless AI, practitioners can focus more on drawing actionable insights from the models and save weeks or even months in development, validation, and deployment process.

Time Series Forecasting: Platform delivers superior time series capabilities to optimize for almost any prediction time window. Driverless AI incorporates data from numerous predictors, handles structured character data and high-cardinality categorical variables, and handles gaps in time series data and other missing values.

NLP with TensorFlow: Driverless AI automatically converts short text strings into features using powerful techniques like TFIDF. With TensorFlow, Driverless AI can also process larger text blocks and build models using all available data to solve business problems like sentiment analysis, document classification, and content tagging.

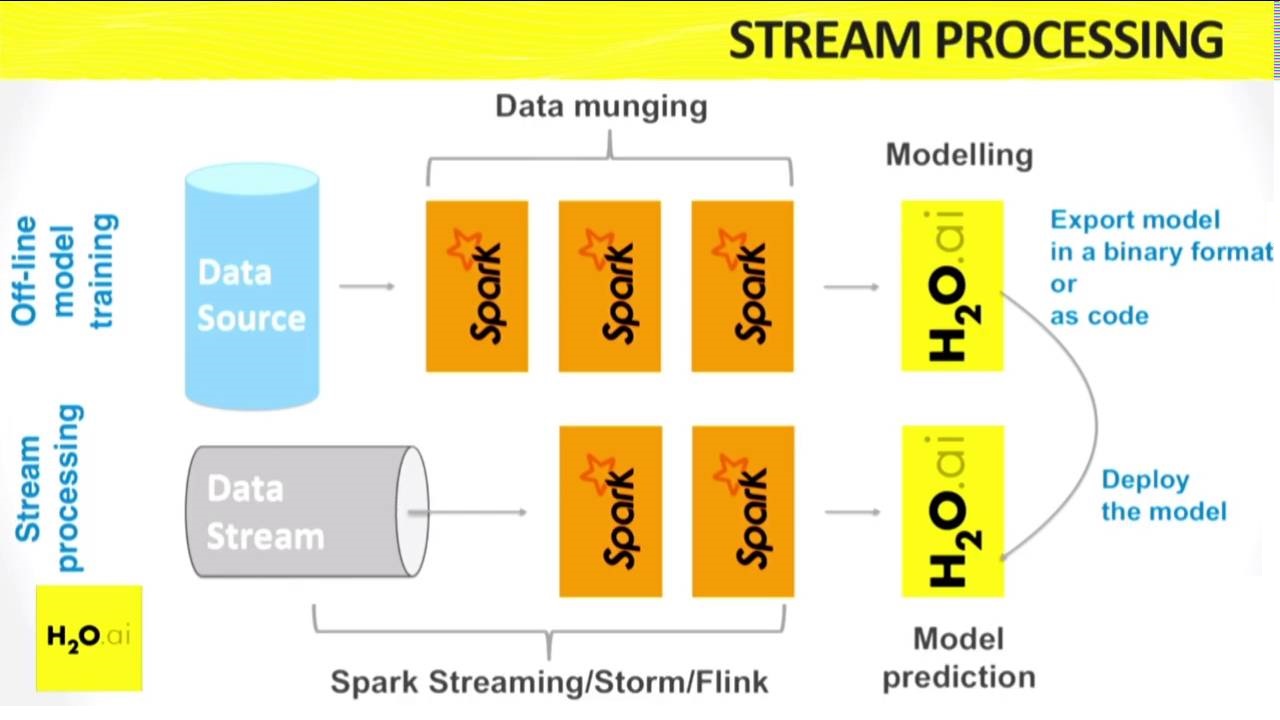

Automatic Scoring Pipelines: For completed experiments, it automatically generates both Python scoring pipelines and new ultra-low latency automatic scoring pipelines. The new automatic scoring pipeline is a unique technology that deploys all feature engineering and the winning machine learning model in a highly optimized, low-latency, production-ready Java code that can be deployed anywhere.

Machine Learning Interpretability (MLI): Driverless AI provides robust interpretability of machine learning models to explain modeling results in a human-readable format. In the MLI view, Driverless AI employs a host of different techniques and methodologies for interpreting and explaining the results of its models. A number of charts are generated automatically (depending on experiment type), including K-LIME, Shapley, Variable Importance, Decision Tree Surrogate, Partial Dependence, Individual Conditional Expectation, Sensitivity Analysis, NLP Tokens, NLP LOCO, and more.

Automatic Reason Codes: Reason codes show the key positive and negative factors in a model’s scoring decision in a simple language. Reasons codes are also useful in other industries, such as healthcare, because they can provide insights into model decisions that can drive additional testing or investigation.

Supported Algorithms

Constant Model – predicts the same constant value for any input data. The constant value is computed by optimizing the given scorer. A constant model is meant as a baseline reference model. If it ends up being used in the final pipeline, a warning will be issued because that would indicate a problem in the dataset or target column (e.g., when trying to predict a random outcome).

Decision Tree – single (binary) tree model that splits the training data population into sub-groups (leaf nodes) with similar outcomes. No row or column sampling is performed, and the tree depth and method of growth (depth-wise or loss-guided) is controlled by hyper-parameters.

FTRL – DataTable implementation of the FTRL-Proximal online learning algorithm. This implementation uses a hashing trick and Hogwild approach for parallelization. FTRL supports binomial and multinomial classification for categorical targets, as well as regression for continuous targets.

GLM – estimate regression models for outcomes following exponential distributions. GLMs are an extension of traditional linear models.

Isolation Forest – useful for identifying anomalies or outliers in data. Isolation Forest isolates observations by randomly selecting a feature and then randomly selecting a split value between the maximum and minimum values of that selected feature. This split depends on how long it takes to separate the points. Random partitioning produces noticeably shorter paths for anomalies. When a forest of random trees collectively produces shorter path lengths for particular samples, they are highly likely to be anomalies.

LightGBM – gradient boosting framework developed by Microsoft that uses tree based learning algorithms. It was specifically designed for lower memory usage and faster training speed and higher efficiency. Similar to XGBoost, it is one of the best gradient boosting implementations available. It is also used for fitting Random Forest, DART (experimental), and Decision Tree models inside of Driverless AI.

RuleFit – creates an optimal set of decision rules by first fitting a tree model, and then fitting a Lasso (L1-regularized) GLM model to create a linear model consisting of the most important tree leaves (rules).

TensorFlow – open source software library for performing high performance numerical computation. Driverless AI includes a TensorFlow NLP recipe based on CNN Deeplearning models.

XGBoost -supervised learning algorithm that implements a process called boosting to yield accurate models. Boosting refers to the ensemble learning technique of building many models sequentially, with each new model attempting to correct for the deficiencies in the previous model. In tree boosting, each new model that is added to the ensemble is a decision tree. XGBoost provides parallel tree boosting (also known as GBDT, GBM) that solves many data science problems in a fast and accurate way. For many problems, XGBoost is one of the best gradient boosting machine (GBM) frameworks today. Driverless AI supports XGBoost GBM and XGBoost DART (experimental) models.

MLI for H2O Driverless AI

Driverless AI provides robust interpretability of machine learning models to explain modeling results in a human-readable format. In the Machine Learning Interpetability (MLI) view, Driverless AI employs a host of different techniques and methodologies for interpreting and explaining the results of its models. A number of charts are generated automatically (depending on experiment type), including K-LIME, Shapley, Variable Importance, Decision Tree Surrogate, Partial Dependence, Individual Conditional Expectation, Sensitivity Analysis, NLP Tokens, NLP LOCO, and more.

Driverless AI Scoring Pipelines

Driverless AI provides several Scoring Pipelines for experiments and/or interpreted models. A standalone Python Scoring Pipeline is available for experiments and interpreted models. The Python Scoring Pipeline is implemented as a Python whl file. While this allows for a single process scoring engine, the scoring service is generally implemented as a client/server architecture and supports interfaces for TCP and HTTP.

A low-latency, standalone MOJO Scoring Pipeline is available for experiments, with both Java and C++ backends. The MOJO Scoring Pipeline provides a standalone scoring pipeline that converts experiments to MOJOs, which can be scored in real time. The MOJO Scoring Pipeline is available as either a Java runtime or a C++ runtime. For the C++ runtime, both Python and R wrappers are provided.

H2O AI customizations

The new bring-your-own-recipe capability ensures data scientists now can quickly customize and extend the Driverless AI to make their own AI with customizations – models, transformers, and scorers, extending the platform to meet any data science requirement. These customized recipes are then treated as first-class citizens in the automatic feature engineering process and eventually creating the winning model. With recipes domain experts now have more options to solve their data science problems and to make their own AI by enabling them to address a variety of use cases ranging from credit risk scoring, customer churn prediction, fraud detection, cyber threat prevention, sentiment analysis and more.

H2O also has the availability to set of vertical specific solutions: anti-money laundering, customer 360 & malicious domain detection. In addition, customers can explore and consume over 100 open-source recipes, curated by Kaggle Grandmasters at H2O.ai.

Project Workspace for Model Operations, Administration, Collaboration

Project Workspace in Driverless AI enables data scientists to collaborate on different projects, build models, tag and version them appropriately for DevOps and IT that can then deploy these models to different environments in a highly scalable and robust manner.

In addition, H2O has a module for Model Admin that enables models that are deployed to be monitored for system health checks and also data science metrics around drift detection, model degradation, A/B testing, and provide alerts for recalibration and retraining.

Explainable AI

H2O Driverless AI provides robust interpretability of machine learning models to explain modeling results. H2O Driverless AI now has added the ability to perform disparate impact analysis to test for sociological biases in models.

This new feature allows for users to analyze whether a model produces adverse outcomes for different demographic groups even if those features were not included in the original model. These checks are critical for regulated industries where demographic biases in the data can creep into models causing adverse effects on protected groups.

H2O Driverless AI provides interpretable-by-design models including linear models, monotonic gradient boosting and RuleFit. In its machine learning interpretability module, Driverless AI employs a host of different techniques and methodologies for explaining the results of its models like K-LIME, Shapley, variable importance, decision tree and partial dependence views.

Hardware and Driverless AI

Driverless AI runs on commodity hardware. It was also specifically designed to take advantage of graphical processing units (GPUs), including multi-GPU workstations and servers such as IBM’s Power9-GPU AC922 server and the NVIDIA DGX-1 for order-of-magnitude faster training.

BlueData

H2O.ai has an established partnership and several joint customers with BlueData. With the BlueData EPIC software platform, data science teams can instantly spin up Driverless AI running on containers—whether on-premises, in the public cloud, or in a hybrid model.

HPE ProLiant DL380 Gen10 Server

The industry-leading server for multi-workload compute, the secure, resilient 2P/2U HPE ProLiant DL380 Gen10 Server features up to three NVIDIA Tesla GPUs and delivers world-class performance and supreme versatility for running AI/ML workloads such as H2O Driverless AI.

HPE Apollo 6500 Gen10 System

This system is a 4U dual-socket server featuring up to eight NVIDIA Tesla GPUs is an ideal high-performance computing (HPC) and deep learning platform. HPE Apollo 6500 Gen10 System provides unprecedented performance with industry-leading NVIDIA GPUs, fast GPU interconnect, high-bandwidth fabric, and a configurable GPU topology to match your workloads.

Installing and Upgrading Driverless AI

For the best Driverless AI should be installed on modern data center hardware with GPUs and CUDA support. To simplify cloud installation, Driverless AI is provided as an AMI. To simplify local installation, Driverless AI is provided as a Docker image.

For native installs (rpm, deb, tar.sh), Driverless AI requires a minimum of 5 GB of system memory in order to start experiments and a minimum of 5 GB of disk space in order to run a small experiment. It is recommended to have lots of system CPU memory (64 GB or more) and 1 TB of free disk space available. For Docker installs, it is recommended 1TB of free disk space.

For running Driverless AI with GPUs, GPU should have compute capacity of at least 3.5 and at least 4GB of RAM. If these requirements are not met, then Driverless AI will switch to CPU-only mode.

Driverless AI supports local, LDAP, and PAM authentication. Authentication can be con_gured by setting environment variables or via a con_g.toml _le. Refer to the Setting Environment Variables section in the User Guide. Driverless AI also supports HDFS, S3, Google Cloud Storage, Google Big Query, KDB, Minio, and Snowake access.

About H2O.ai

H2O.ai is the open source leader in AI and automatic machine learning with a mission to democratize AI for everyone. H2O.ai is transforming the use of AI to empower every company to be an AI company in financial services, insurance, healthcare, telco, retail, pharmaceutical and marketing. H2O.ai is driving an open AI movement with H2O, which is used by more than 18,000 companies and hundreds of thousands of data scientists. H2O Driverless AI, an award winning and industry leading automatic machine learning platform for the enterprise, is helping data scientists across the world in every industry be more productive and deploy models in a faster, easier and cheaper way. H2O.ai partners with leading technology companies such as NVIDIA, IBM, AWS, Intel, Microsoft Azure and Google Cloud Platform and is proud of its growing customer base which includes Capital One, Nationwide, Walgreens and MarketAxess. H2O.ai believes in AI4Good with support for wildlife conservation and AI for academics.

Leave a Reply